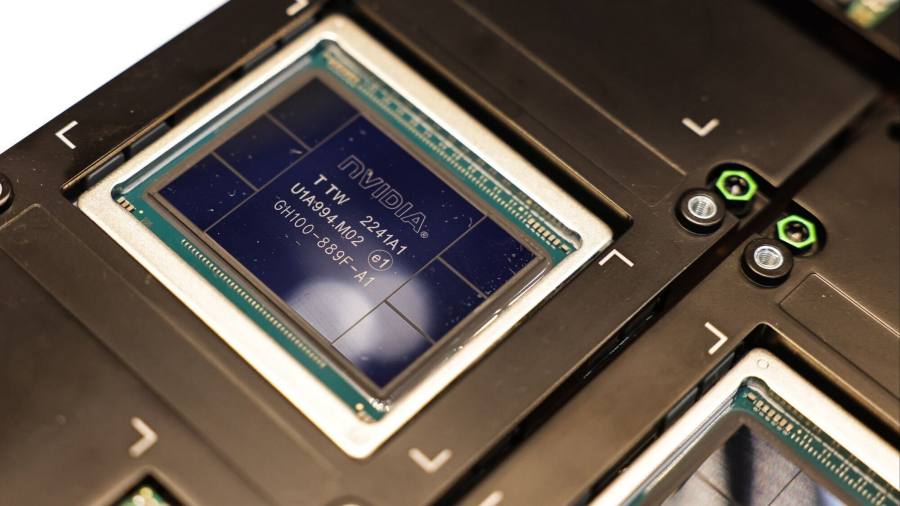

Investors are set to assess whether overwhelming demand for artificial intelligence products can help offset a slump in global PC sales when Nvidia reports quarterly results on Wednesday.

The US group said in its previous earnings report that demand for its processors to train large language models, such as OpenAI’s ChatGPT, would raise revenue by about two-thirds and help quadruple its earnings per share in the three months to the end of July.

The world’s most valuable chipmaker now plans to at least triple production of its top-of-the-line H100 AI processor, according to three people close to Nvidia, with shipments of between 1.5 million and 2 million H100s in 2024 representing a huge jump from the 500,000 expected this year.

With AI processors already sold out through 2024, the massive thirst for Nvidia chips is hitting the broader market for computing equipment, with major buyers pouring investment into AI at the expense of general-purpose servers.

Foxconn, the world’s largest contract electronics maker by revenue, last week predicted very strong demand for AI servers for years to come, but also warned that overall server revenue will decline this year.

Lenovo, the largest PC maker by units shipped, last week reported an 8 percent decline in its revenue in the second quarter, which it attributed to soft server demand from cloud service providers (CSPs) and a shortage of AI processors (GPUs).

“[CSPs] are shifting their demand from traditional computers to AI servers. But unfortunately, the supply of AI servers is limited by the supply of GPUs,” said Yang Yuanqing, CEO of Lenovo.

Taiwan Semiconductor Manufacturing Co., the world’s largest contract chip maker by revenue and exclusive producer of Nvidia’s cutting-edge AI processors, predicted last month that demand for AI server chips would grow nearly 50 percent annually over the next five years. But it said this was not enough to offset the downward pressure from the global technology downturn caused by the economic slowdown.

And in the US, cloud service providers such as Microsoft, Amazon and Google, which account for the lion’s share of the global server market, are shifting their focus to building their own AI infrastructure.

“The overall weak economic environment presents a challenge for cloud providers in the United States,” said Angela Hsiang, vice president of Taipei-based KGI Brokerage. “Because every component of the AI servers needs to be upgraded, the price is much higher. Cloud providers are aggressively expanding their AI servers, but that was not on the table when the capital expenditure budgets were drawn up, so this expansion cannibalizes other spending.”

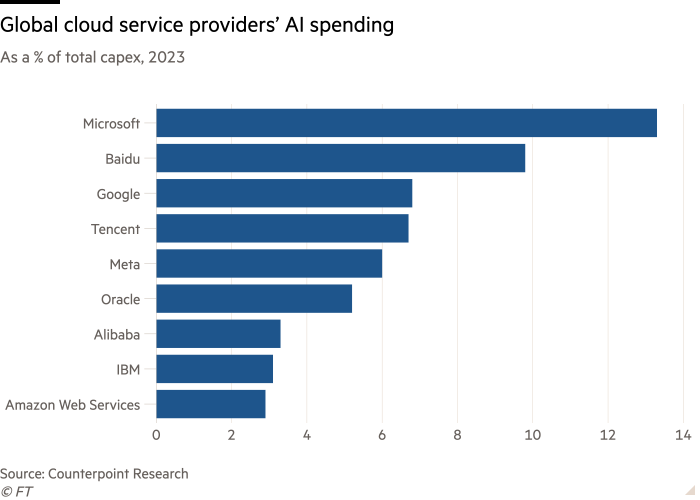

Globally, CSP capital spending is expected to grow just 8 percent this year, down from growth of nearly 25 percent in 2022, according to Counterpoint Research, as interest rates rise and companies cut back.

Industry research firm TrendForce expects global server shipments to drop 6 percent this year and expects a return to modest growth of 2 percent to 3 percent in 2024. It points to Meta Platforms’ decision to cut server purchases by more than 10 percent and to direct investment toward AI hardware, and delays in Microsoft’s upgrades to its general-purpose servers to save money for AI server expansion.

Besides the shortage of Nvidia chips, analysts point to other bottlenecks in the supply chain that are delaying the AI harvest for the hardware sector.

“There is a lack of capacity in both advanced packaging and high-bandwidth memory (HBM), both of which are limiting production output,” said Brady Wang, an analyst at Counterpoint. TSMC plans to double its capacity on CoWoS, an advanced packaging technology required to make the Nvidia H100 processor, but has warned that the bottleneck won’t be resolved until at least the end of 2024. The main suppliers for HBM are SK Hynix and Samsung from South Korea.

The Chinese market faces an additional hurdle. Although Chinese cloud service providers such as Baidu and Tencent allocate as high a share of their investment to AI servers as Google and Meta do, their spending is constrained by Washington’s export controls on Nvidia’s H100. The alternative for Chinese companies is the H800, which is a less powerful version of the chip that carries a much lower price tag.

A sales manager from Inspur Electronic Information Industry, a leading Chinese server provider, said that customers demand fast delivery, but manufacturers suffer from delays. “In the second quarter, we delivered 10 billion renminbi ($1.4 billion) of AI servers and received another 30 billion renminbi worth of orders… The most annoying thing is Nvidia’s GPU chips: we never know how much we can get,” he said.

But once the global economy improves and shortages subside, companies in the server supply chain can reap huge benefits, say company executives and analysts.

Brokerage firm KGI predicts that shipments of servers dedicated to training AI algorithms will triple next year, while Dell’Oro, a California-based technology research firm, expects the share of AI servers in the overall server market to rise from 7 percent last year to about 20 percent in 2027.

Because of the significantly higher cost of AI servers, “these deployments could account for more than 50% of total expenditures by 2027,” Dell’Oro analyst Baron Fung said in a recent report.

“For the supply chain, it’s just a multiple of everything,” said Hsiang of KGI. She said that with eight GPUs in a single AI server, the demand for motherboards with GPUs is bound to be higher than for general servers. The AI servers also need larger racks to put the processor units on.

The much higher power consumption of generative AI servers compared to general purpose servers also creates the need for different cooling systems and new power supply specifications.

Foxconn could be among the main beneficiaries of this shift because the group offers everything from various components to final assembly. Its subsidiary, Foxconn Industrial Internet, is already the exclusive supplier of GPU modules for Nvidia.

For Wiwynn, a subsidiary of Foxconn’s server rival Wistron, AI orders already account for 50 percent of revenue, more than double the percentage seen last year, according to Goldman Sachs.

Analysts also see a strong bullish trend for component providers. Goldman Sachs said in a report released in June that a Taiwanese printed circuit board (PCB) maker could see AI servers jump from less than 3 percent of its revenue this year to as much as 38 percent, because PCB content in AI servers is higher than in general-purpose servers.
